We designed solutions to improve the accessibility of Google Assistant's voice features for 2 million elders aged 65 and older with speech disabilities.

Context:

The current app fails to support the communication needs of people with diverse speech patterns and speech disabilities.

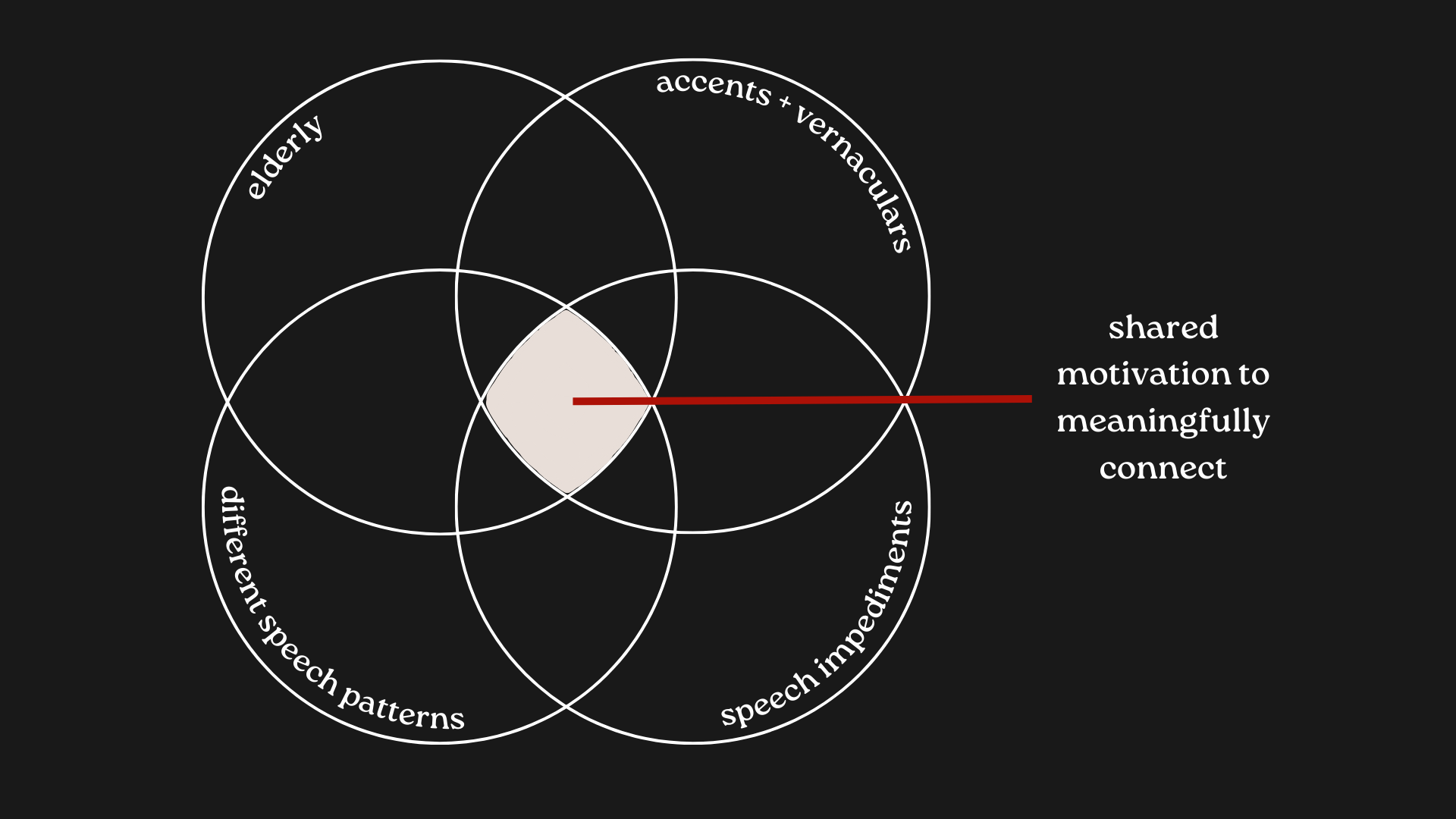

Older adults, speakers with accents or regional vernaculars, and people with atypical speech patterns or speech disabilities share a common challenge: connecting meaningfully through technology. Current built-in Android apps do not support these needs effectively because they lack tailored speech recognition and user-specific customization options.

Who is affected? Millions of adults aged 65 and older, as well as people with speech and language disabilities, struggle to navigate apps.

Who did I design for?

I designed for users aged 65 and older and for individuals with atypical speech characteristics, including slower speech rates, longer inter-syllabic silences, changes in voice patterns, increased hoarseness, slurred speech, and interrupted speech.

Most importantly: Designed for my loved ones

Drawing on my own experiences and those of my loved ones, such as watching my parents and grandparents struggle to use tech devices, I kept asking "what if" and "how might we" questions to address their pain points:

How Might We:

Help elders meaningfully connect and communicate with others through technologies?

First and foremost motivation: Social connection

Pain point 1: Elders want to connect meaningfully with their children, grandchildren, friends, co-workers, and others through information access and self-expression, so that they do not become isolated.

How Might We:

Provide a more pleasant experience and greater accuracy with technology interactions?

Pain point 2: Elders with visual impairments and limited technical experience often struggle to navigate touchscreens and other complex technology on their own.

Designing Speech Recognition in Google Assistant

Justifying the Why: Why Did I Target Speech Recognition?

-> Prefer Speech over Text: Users can take full advantage of a product or service through spoken commands alone.

Throughout the design and research process, I found that speech recognition can address both pain points above. It also benefits emergency communication and situations where touch or text input is difficult (e.g., physical constraints or limited literacy).

How Did I Design the Voice Assistant?

Design Deliverable 1: Age and Tutorial Customization: During the setup of a new device (e.g., phone or tablet), we prompt users to enter their age. Based on their age group, we suggest relevant video tutorials and provide intuitive tips.

Design Deliverable 2: Balance Configuration for Time Lag: Users can tell the system their typical speaking speed. The goal is to balance the delay so the assistant waits neither too long nor too short before responding, accommodating the user's natural speaking pace while keeping the interaction smooth and efficient.
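To make the time-lag balance concrete, it can be pictured as a small configuration that maps a user's self-reported speaking pace to how long the assistant waits in silence before treating an utterance as finished. The sketch below is a hypothetical illustration under stated assumptions, not Google Assistant's actual implementation: the pace labels, the `SilenceTimeoutConfig` type, the `silence_timeout_ms` function, the baseline timeout, the multipliers, and the clamping bounds are all invented for this example.

```python
from dataclasses import dataclass

# Hypothetical multipliers applied to a baseline silence timeout.
# These are illustrative assumptions, not measured or product values.
PACE_MULTIPLIERS = {
    "fast": 0.8,
    "typical": 1.0,
    "slow": 1.5,
    "very_slow": 2.0,
}


@dataclass(frozen=True)
class SilenceTimeoutConfig:
    """Hypothetical bounds (in milliseconds) for waiting after speech stops."""
    base_ms: int = 800   # assumed default wait for a typical speaker
    min_ms: int = 500    # never cut the user off sooner than this
    max_ms: int = 2500   # never leave the user waiting longer than this


def silence_timeout_ms(pace: str, config: SilenceTimeoutConfig = SilenceTimeoutConfig()) -> int:
    """Return how long to wait in silence before ending the utterance.

    The self-reported pace scales the baseline, and the result is clamped
    so the delay is neither too short (cutting off slow or interrupted
    speech) nor too long (making the assistant feel unresponsive).
    """
    if pace not in PACE_MULTIPLIERS:
        raise ValueError(f"unknown pace: {pace!r}")
    scaled = round(config.base_ms * PACE_MULTIPLIERS[pace])
    return max(config.min_ms, min(config.max_ms, scaled))


if __name__ == "__main__":
    for pace in ("fast", "typical", "slow", "very_slow"):
        print(pace, silence_timeout_ms(pace))
```

With the assumed defaults, a "slow" speaker gets a 1200 ms wait instead of 800 ms. The clamp is the "balance" in this sketch: a longer pause threshold gives room for longer inter-syllabic silences, while the upper bound keeps the assistant from feeling stalled.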

Who Did I Work With?

I had the opportunity to work on-site in Sunnyvale, CA, with talented Google researchers and scholars on UX design methods for inclusion and equity, directly enhancing Google products.

What I Learned

- "A single solution does not exist; multiple approaches are needed," from "Designs for the Pluriverse" by Arturo Escobar

Throughout the design process, I embraced this idea by continually exploring different possibilities and what-if scenarios. This mindset pushed me beyond a single solution and encouraged me to communicate openly with my teammates.

- Design for the margins to account for the middle

From ideation to production, the design process centers on the elderly community, the most impacted and marginalized users. Because older adults often face significant barriers to technology, designing for them means addressing the most demanding needs first. This forced me to think of solutions that are more flexible, intuitive, and accessible. In practice, I learned that these solutions tend to work well for a wider audience: if a design works for users with the most challenging needs, it is likely to work for those with less stringent requirements.